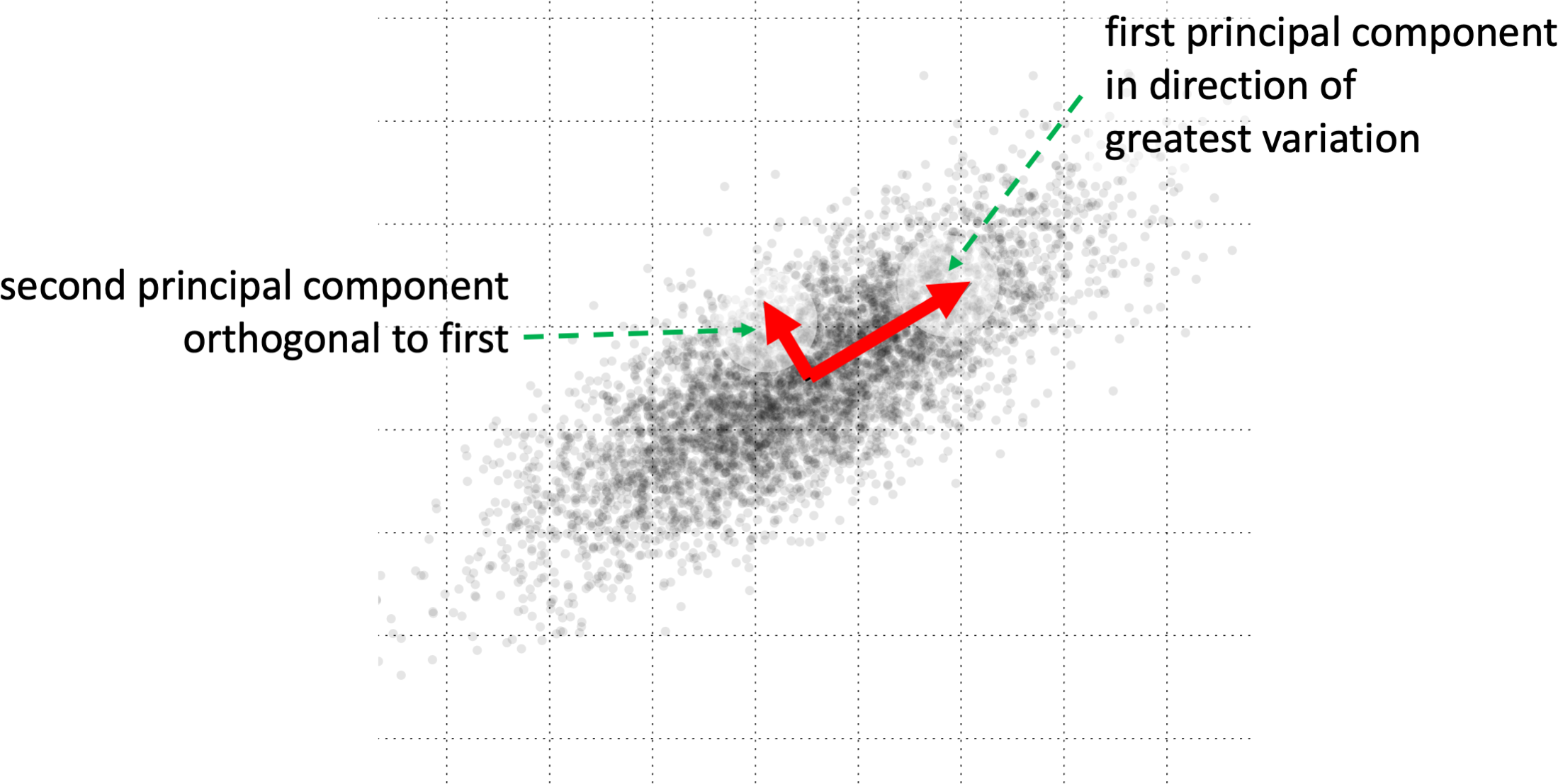

Principal components analysis attempts to find a lower-dimensional subspace that captures the majority of the variation in a dataset. It is a statistical technique that is used in various ways in AI. Within explainable AI it may be used to help interpret complex data, such as the internal layers of a deep neural network. It can also be used as a dimensionality reduction technique during data preparation. One way to calculate the principal components of a dataset is first to calculate the feature cross-correlation matrix, that is, the matrix of correlations between the different features. The principal components are then the N eigenvectors with the largest eigenvalues. In particular, the first principal component is the principal eigenvector. One can decide in advance how many principal components are required, or inspect them one by one, stopping at the point where the eigenvalues drop below a chosen level.
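
The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, assuming NumPy is available. The function name `principal_components` and the synthetic 2D dataset are invented for the example and do not come from the book.

```python
import numpy as np


def principal_components(data, n_components):
    """Return the largest eigenvalues and matching eigenvectors of the
    feature correlation matrix.

    data: array of shape (n_samples, n_features), one column per feature.
    """
    # Correlation matrix between features (rowvar=False: columns are features).
    corr = np.corrcoef(data, rowvar=False)
    # eigh is intended for symmetric matrices; eigenvalues are returned ascending.
    eigenvalues, eigenvectors = np.linalg.eigh(corr)
    # Indices of the n_components largest eigenvalues, largest first.
    order = np.argsort(eigenvalues)[::-1][:n_components]
    return eigenvalues[order], eigenvectors[:, order]


# Synthetic 2D data (illustrative only): two strongly correlated features.
rng = np.random.default_rng(0)
x = rng.normal(size=500)
data = np.column_stack([x, x + 0.1 * rng.normal(size=500)])

values, vectors = principal_components(data, n_components=1)
first_pc = vectors[:, 0]  # the principal eigenvector

# Because the correlation matrix was used, standardise before projecting.
standardised = (data - data.mean(axis=0)) / data.std(axis=0)
reduced = standardised @ vectors  # shape (500, 1): a 1D summary of 2D data
```

To stop where the eigenvalues drop below a chosen level instead of fixing the number in advance, one option is to request all components and keep only those whose eigenvalue exceeds that level.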

Used in Chap. 7: pages 92, 96, 97, 100; Chap. 8: pages 107, 108; Chap. 9: pages 117, 129; Chap. 10: pages 137, 140; Chap. 13: page 202

Also known as principal components

Principal components for 2D data